Adobe Develops AI That Can Detect If Faces Were Manipulated In Photoshop

The world is becoming increasingly anxious about the spread of fake videos and pictures, and Adobe — a name synonymous with edited imagery — says it shares those concerns. Today, it’s sharing new research in collaboration with scientists from UC Berkeley that uses machine learning to automatically detect when images of faces have been manipulated.

It’s the latest sign the company is committing more resources to this problem. Last year, its engineers created an AI tool that detects media edited by splicing, cloning, and removing objects.


The company says it doesn’t have any immediate plans to turn this latest work into a commercial product, but a spokesperson told The Verge it was just one of many “efforts across Adobe to better detect image, video, audio and document manipulations.”

“While we are proud of the impact that Photoshop and Adobe’s other creative tools have made on the world, we also recognize the ethical implications of our technology,” said the company in a blog post. “Fake content is a serious and increasingly pressing issue.”

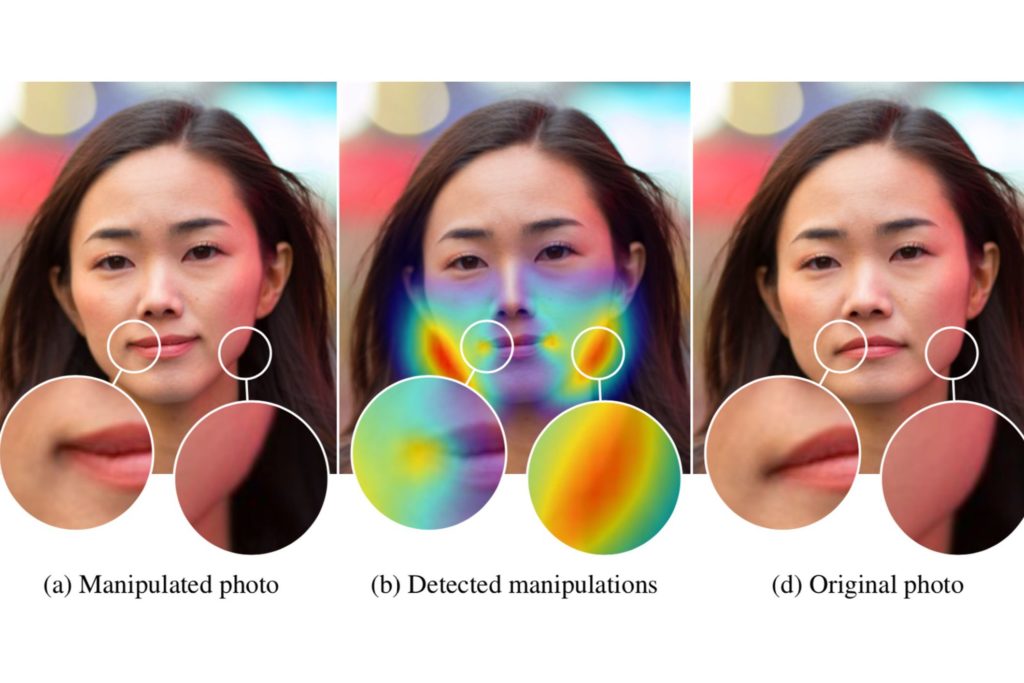

The research is specifically designed to spot edits made with Photoshop’s Liquify tool, which is commonly used to adjust the shape of faces and alter facial expressions. “The feature’s effects can be delicate which made it an intriguing test case for detecting both drastic and subtle alterations to faces,” said Adobe.

To create the software, engineers trained a neural network on a database of paired faces, containing images both before and after they’d been edited using Liquify.
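As a rough illustration of what "paired" training data means here, the sketch below turns before/after image pairs into labeled examples for a binary classifier (edited or not). It is a minimal sketch under stated assumptions, not Adobe's pipeline: the file names, the `LabeledImage` type, and the `build_training_examples` helper are all hypothetical.

```python
from dataclasses import dataclass
from typing import List, Tuple


@dataclass(frozen=True)
class LabeledImage:
    """One training example: an image path plus whether it was edited."""
    path: str
    edited: bool


def build_training_examples(pairs: List[Tuple[str, str]]) -> List[LabeledImage]:
    """Expand (original, edited) path pairs into individually labeled examples.

    Hypothetical helper: each pair contributes one unedited and one edited
    example, so the two classes stay balanced.
    """
    examples: List[LabeledImage] = []
    for original_path, edited_path in pairs:
        examples.append(LabeledImage(original_path, edited=False))
        examples.append(LabeledImage(edited_path, edited=True))
    return examples


# Hypothetical file names, for illustration only.
pairs = [
    ("face_001.png", "face_001_liquify.png"),
    ("face_002.png", "face_002_liquify.png"),
]
examples = build_training_examples(pairs)
```

Because every original has an edited counterpart, a classifier trained on data like this sees the same face in both states, which pushes it to learn the traces of the edit rather than features of the face itself.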

The resulting algorithm is impressively effective. When asked to spot a sample of edited faces, human volunteers got the right answer 53 percent of the time, while the algorithm was correct 99 percent of the time. The tool is even able to suggest how to restore a photo to its original, unedited appearance, though these results are often mixed.

“The idea of a magic universal ‘undo’ button to revert image edits is still far from reality,” Adobe researcher Richard Zhang, who helped conduct the work, said in a company blog post. “But we live in a world where it’s becoming harder to trust the digital information we consume, and I look forward to further exploring this area of research.”

The researchers said the work was the first of its kind designed to spot this sort of facial edit, and that it constitutes an “important step” toward creating tools that can identify complex changes including “body manipulations and photometric edits such as skin smoothing.”

While the research is promising, tools like this are no silver bullet for stopping the harmful effects of manipulated media. As we’ve seen with the spread of fake news, even if content is obviously false or can be quickly debunked, it will still be shared and embraced on social media. Knowing something is fake is only half the battle, but at least it’s a start.

Credit: Adobe